Discoveries Weekly No. 14 (July 13-July 19, 2020)

Here are some discoveries that fascinate me this week.

- Good scientific software and reproducible workflow

- Computational biology

- Programming

- Other gems

- Sentence of the week

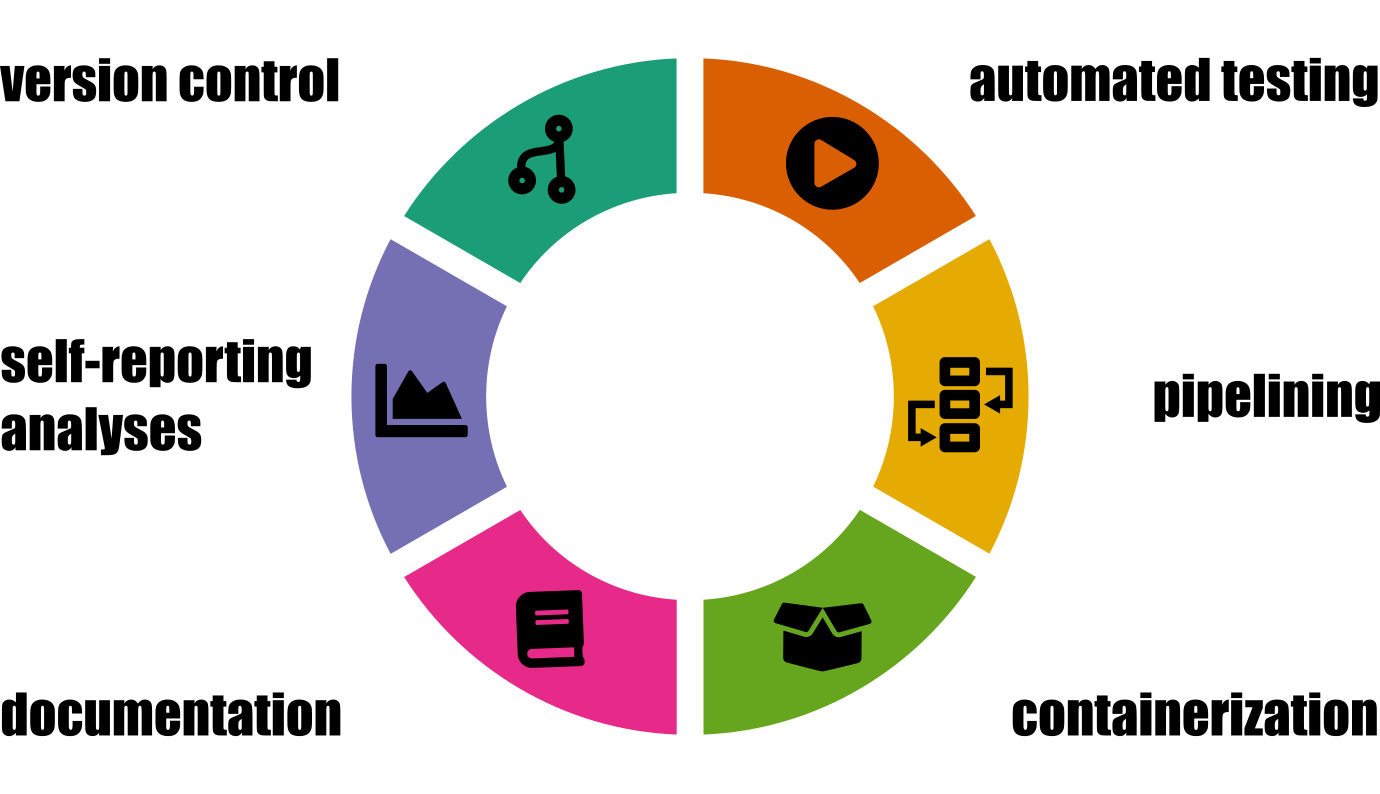

Good scientific software and reproducible workflow

Gregor Sturm wrote a blog post Hallmarks of Good Scientific Software. I recommend it to every one working with scientific software, let it be software packages and data analysis reports.

Workflow systems play an ever more important role in computational biology. dib-lab/2020-workflows-paper is a repository underlying the bioRxiv preprint Streamlining data-intensive biology with workflow systems. A related publication is Open collaborative writing with ManuBot.

For those who want to learn the tools, here is an interesting course provided by NIBS Sweden: Tools for reproducible research. It covers Git, conda, Snakemake, Jupyter, R Markdown, Docker, Singularity, and tips about making a project reproducible. These are very important issues indeed. I think if we can complement them with good coding practice for Python and R, as well as tools for productivity, a few other tools like NextFlow (see seqeralabs/nextflow-tutorial for a tutorial of NextFlow), including job managers like Slurm, and introduce how to find information efficiently using resources like StackOverflow and BioStar and how to store and retrieve them efficiently (for instance using Zettelkasten, see below), we may have a very reasonable introductory course to modern scientific programming in computational biology.

There we see a trend of shifting focus of from algorithms to programs and workflows. As Derek Jones put it: algorithms are now commodities. He talked about, I believe, a general observation in the programming world. It also applies, at least to some extent, to scientific programming. Whereas bioinformaticians and (if there were any) computational biologists twenty years ago must master algorithms and data structures, today they have to pay more focus on workflows, choosing tools, or the ecosystem. It does not mean that algorithms are not important any more. They matter a lot particularly if one’s wish is to make ground-breaking discoveries and to push the boundaries of possibilities. Nevertheless, for average and most users of bioinformatics and computational biology tools, it comes more and more to the question which to use instead how to code.

Computational biology

Analysing single-cell T-cell receptor sequencing data with Scirpy

See my other blog post, which introduces Scirpy and reviews basic biology of antigen presentation.

Embedding of transcription factors and binding sequences with BindSpace

Yuan et al., Nature Methods, 2019 reported their effort to embed transcription factors and binding sequences in the same space, known as BindSpace. The prediction model they built was trained by binding data of TFs and embedding over one million sequences. The authors claimed that the model achieved state-of-the-art multi-class binding prediction performance, and can distinguish signals of closely related TFs.

Human transcription factors that control viral expression

Liu et al. reviewed 419 human virus transcriptional regulators that control expression of virus of 20 families. This is a complementary read to Lambert et al., Cell 2018, Human Transcription Factors.

Other gems in computational biology

- Illumina/Pisces, a small variant calling application by Illumina, GPL-3 license.

- Population genetics and genomics in R, a online tutorial by Grünwald et al.

- nf-core/sarek, a NextFlow workflow to detect genetic variants with sequencing data.

- Difference between gVCF and VCF formats

- snippy, a command-line too for variant calling of haploid (bacterial and viral) genomes. From the same author Torsen Seemann, prokka is a tool for rapid annotation of prokaryotic genomes.

Programming

Profiling and parallelization in R

This week I tried a few options to profile my R code and parallelize some of

them. I used the profvis

(rstudio/profvis on GitHub) package to

profile R code.

My code using parallel::mclapply run forever on a high-performance cluster

(HPC) head server but finished very quickly in cluster servers. It seems that I

am not the only one suffering from that. Programs relying on parallel and

BiocParallel, for instance zinbwave, seem to also have problems of

multithread/multicore running, particularly in a cluster setting. Some recent

discussions are here on GitHub,

drisso/zinbwave.

In my case, it turned out the code runs comparably fast without parallelization. So for now I set parallelization as as optional. Maybe later I will revisit that. For sure I have more to learn.

A few relevant resources of parallelization in R:

- See this blog post by Edwin Thoen on running parallel jobs in RStudio.

- According to this Q&A on

StackOverflow,

do not use two layers of

mclapply. Use it in the outer loop rather than in the inner loop. Do not use all cores/threads, useN-1orN/2. - More about parallelization in R can be found in this blog post by Michael Hallquist.

Singularity

Singularity is a program to create and manage portable container images. It can be used to make scientific software and code reproducible and run virtually anywhere.

Conceptually it is closely related with Docker. The biggest advantage of

Singularity compared with Docker is that it does not require sudo permission,

which normally we do not have when we work on HPCs. There are other advantages

as well. See discussions on reddit Why singularity is preferred on

high-performance clusters (HPCs) over

Docker.

Singularity 3+ is written in the GO programming language. To install Singularity, one needs to install the go compiler first. After it is done, one can follow The Quick Start to download, compile, install, and use Singularity.

Cleaning up my Debian/MINT/Ubuntu system

I run a virtual Linux Mint system within VirtualBox in a Windows 10 host. It runs out space this week. Here are some tricks that bought me more space:

sudo apt-get autoremove- Delete unused flatpak images:

flatpak uninstall --unused - Remove stopped containers and all unused docker images:

docker system prune -a. - Use

journalctl --disk-usageto check how much disk space is taken by journal, and usesudo journalctl --vacuum-size=200M(limiting by size) orsudo journalctrl --vacuum-time=5d(limiting by days of journal to be kept). - Delete

~/.cache. Do not do that to~/.local. - Use

df(disk usage, command line) anddu(folder disk usage, command line) andBaoab(GUI) to analyse disk usage and spot large files and/or directories.

The <U+FEFF> character shows up at the beginning of a file

When I download a file from Google Drive as plain text, I found a character shown

as <U+FEFF> at the beginning of the file, when using less on the command

line.

It is a zero width no-break space, known as Byte order mark or BOM. It can

mean many things, but in essence, it tells a program on how to read the text. It

can be UTF-8 (more common), UTF-16 (which is represented by FEFF), or even

UTF-32. See a quick tale about it here on

freecodecamp.org.

The character may cause problem for some programs (for instance, seqret of the

EMBOSS suite to convert sequences). To remove the character, use awk (discovered

via

GitHub):

awk '{ if (NR==1) sub(/^\xef\xbb\xbf/,""); print }' INFILE > OUTFILE

Preview Markdown files with mdless and grip

I learned two tools for working with and previewing Markdown files efficiently on the command line.

mdless works like less, but formats Markdown files nicely. It is written in

the programming language Ruby and is available via gem.

## install mdless

sudo gem install mdless

The python package Grip, the source code of which is available at GitHub joeyespo/grip, renders local README files before sending off to GitHub.

## install grip

pip install grip

Other gems in programming

- ggreer/the_silver_searcher is

a code searcher similar to

ack, agrep-like source code search tool. - Solving the file mode problem with git, which complains about old mode

(100644) and new mode (100755), discovered from

StackOverflow:

git config core.filemode false - tidylog: elbersb/tidylog provides logging for tidy functions.

library("dplyr")

library("tidyr")

library("tidylog", warn.conflicts = FALSE)

filtered <- filter(mtcars, cyl == 4)

#> filter: removed 21 rows (66%), 11 rows remaining

mutated <- mutate(mtcars, new_var = wt ** 2)

#> mutate: new variable 'new_var' (double) with 29 unique values and 0% NA

Other gems

A Mobile Robotic Chemist

The Robo Chemist, reported by Burger et al. on Nature, demonstrates what can be achieved by automation and robotics. See below a video.

What strikes me most is when Andrew Cooper, the principal investigator, said they had no experience with robotics three years ago when they started the project, and now they stand there with a very interesting product that may help chemists screen molecules. For sure the team is a very good one, but it also implies that the threshold of setting up robust robotic systems is becoming lower and lower. I believe this will create a self-feedback system where such robots will be quicker and better designed and engineered and applied. On the other side, human experts made most important decisions: what reaction do we work on? When the search is stuck, how to proceed with alternative strategies? Despite of the great achievement, these human factors remain critical for now in applying robots for scientific enterprises.

It remains the BIG questions: how can we human efficiently collaborate with such robots to translate ideas into more and better research products (including drugs)? And in which areas should we become better, for instance encoding intuition, fixing bugs, and connecting knowledge, to prepare for such collaborations?

A great read about this is written by Derek Lowe in this blog In The Pipeline.

Zettelkasten

I was inspired by the discussions on Hacker News and the post by Gregor Sturm to give a look at Zettelkasten, a way of managing and linking knowledge and information. I want to give it a try.

I already take my notes with Markdown using Vim. Here is a Vim plugin michal-h21/vim-zettel to make it easier to build a Zettelkasten system.

Interesting tools and ideas

- OneLook Theasurus, a reverse dictionary with Datamuse API, a word-finding query engine for developers.

- Building a self-updating profile README for GitHub with Actions.

- Why general artificial intelligence will not be realized by Ragnar Fjelland.

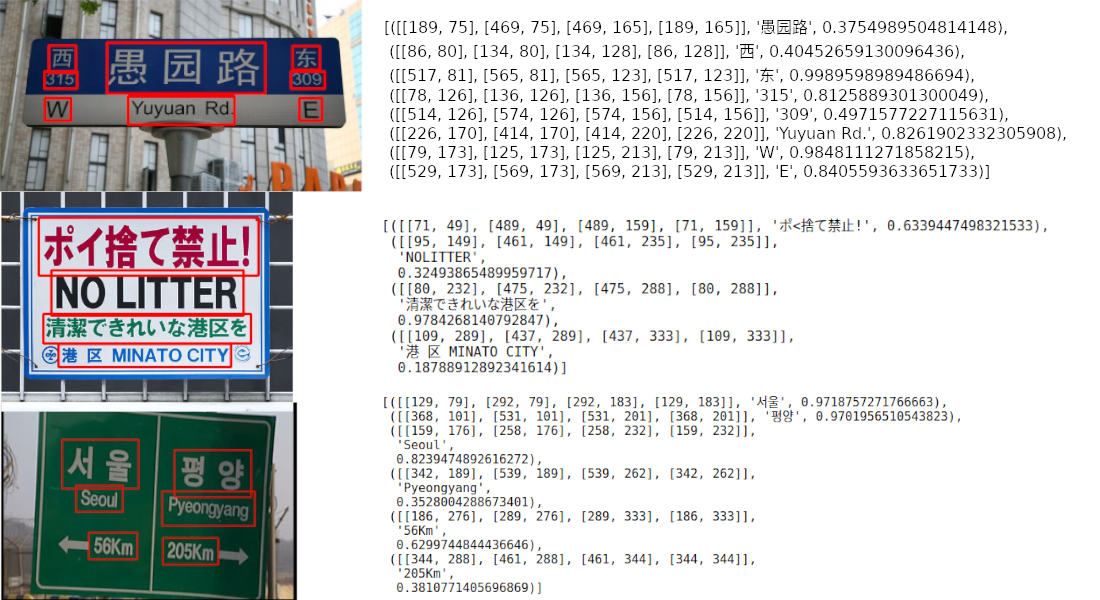

- EasyOCR, an optical character recognition (OCR) tool supporting many languages.

Sentence of the week

Stephan King on the happiness (or even ecstasy) of creators (On Writing, page 170).

Talent renders the whole idea of rehearsal meaningless; when you find something at which you are talented, you do it (whatever it is) until your fingers bleed or your eyes are ready to fall out of your head.

Happy weekend!